I recently heard about one of the worst pieces of AI technology that I have ever come across. The product is simply called Friend, and it is the newest concept in the AI industry. If you haven’t seen it before, let me explain what it is. Friend is an AI device in the form of a necklace with a pendant, designed to be your own personal AI friend.

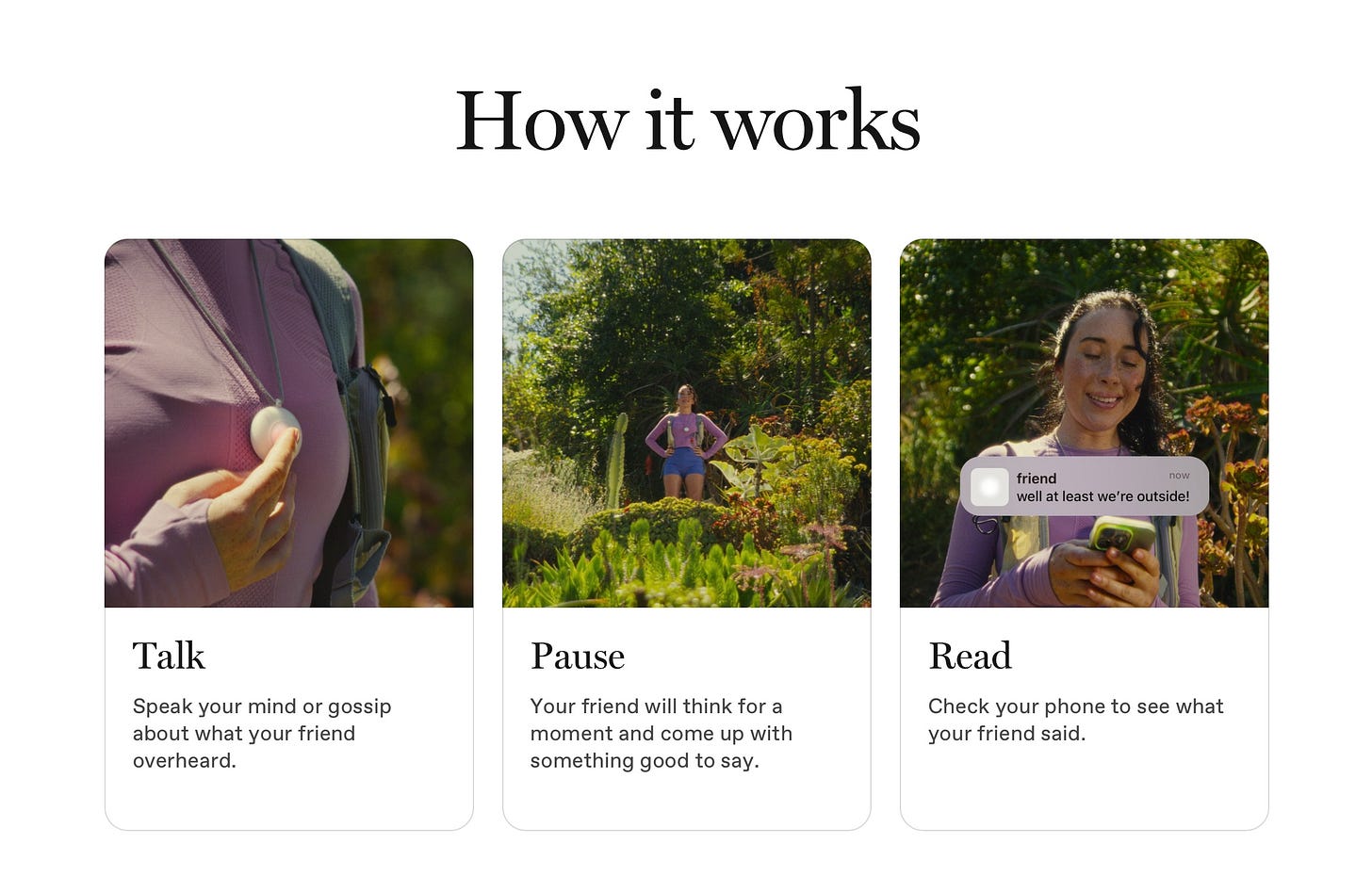

It uses a built-in microphone to listen to you throughout the day and uses the information it gathers to send you text messages based on your current context. All you have to do is talk (whether to yourself, to someone else, or to the device itself), and the AI model will take a few moments to think before sending a text message to your phone.

In the reveal trailer, which was published on multiple social media platforms, people are shown going about their daily lives while the AI model sends them text messages. For example, one woman orders some food, and the AI texts her to ask how it tasted. You can watch the video below to see more examples:

The device is expected to come out in January 2025 and cost $99. Even though this product hasn’t been released yet, two HUGE problems come to mind. The first is that this device is always listening to you. I don’t know about you, but I don’t really want any device listening to me, especially one made by a company that we have never heard of before. According to the website, “No audio or transcripts are stored past your friend’s context window. Your data is end-to-end encrypted. All memories can be deleted in one click within the friend app.” What exactly defines the “context window,” though? Is my friend’s context window 30 minutes, 1 day, or a whole year? Companies such as Apple go to great lengths to ensure that your data is protected and can’t be accessed by anyone, not even their own employees. How can we be sure that this company isn’t harvesting data? Now, I don’t want to assume the worst. Maybe this company has only good intentions, but I don’t like the idea of owning an AI device that is dedicated to listening to me 24/7.

The second problem I have with this device is the ethics behind it. While AI chatbot “friends” have been around for a while now, this takes the idea to the next level. People are meant to befriend and talk with other people, not AI. By giving AI a personality and the appearance of feelings, which is essentially what this product does, we are elevating it to the level of a human. Avi Schiffmann, the creator of this project, claims that part of the reason he created this device was to help solve the problem of loneliness. While this may seem plausible at first, I would argue that it only makes the matter worse. Instead of having real people come alongside someone who is lonely and befriend them, we are letting AI do it. The truth of the matter is that AI doesn’t have feelings. AI can’t connect with people the way humans can, because it is simply technology.

Now, I don’t want to sound like a conspiracy theorist and claim that AI is going to take over the world and destroy humanity, but I do think it is possible for us to become too dependent on AI and, in this case, too emotionally attached to it. As the Friend website states, “Your friend and their memories are attached to the physical device. If you lose or damage your friend there is no recovery plan.” If you lose or damage this thing, your “friend” is gone, and there is no way to get him or her back. Honestly, the whole idea of calling this device your “friend” just makes me cringe. Instead of going out into the world and meeting other humans, we are turning to AI devices that have no feelings and no personality to confide in, talk to, and befriend. It doesn’t get much more dystopian than that. Don’t get me wrong, I’m excited about a lot of the AI technology that has been introduced recently, but products like this honestly disgust me and make me worried about the direction the human race is heading. As you can probably tell, I don’t like this product and will not be purchasing it when it comes out. If you would like to learn more, you can visit the website here.

Wow!! Crazy!

I definitely agree that AI as a friend will only make loneliness worse. In-person relationships are something that technology can never replace. Look at social media, for instance. Despite its attempts to alleviate loneliness, it has, in many cases, made the problem much worse by leading people to spend their time socializing with digital friends rather than real ones. AI is meant to be an assistant, and while human-like qualities are convenient, they should be used to help understand a user's request, NOT to form a relationship with the user.